OpenEvolve for Quasi-Monte Carlo Design

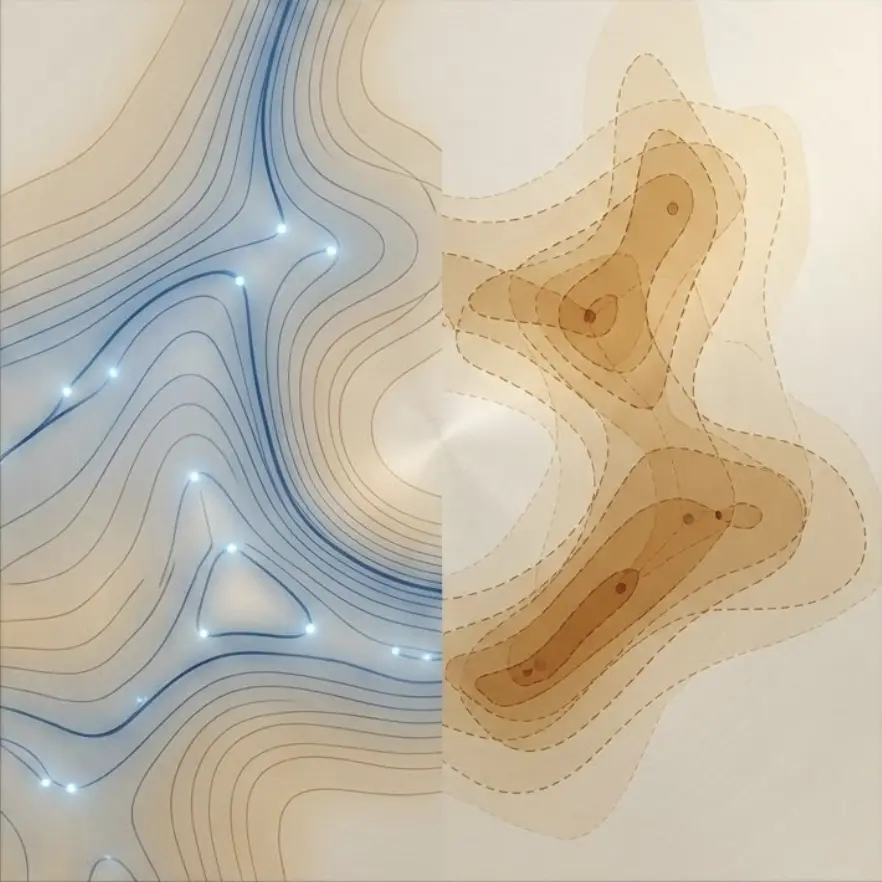

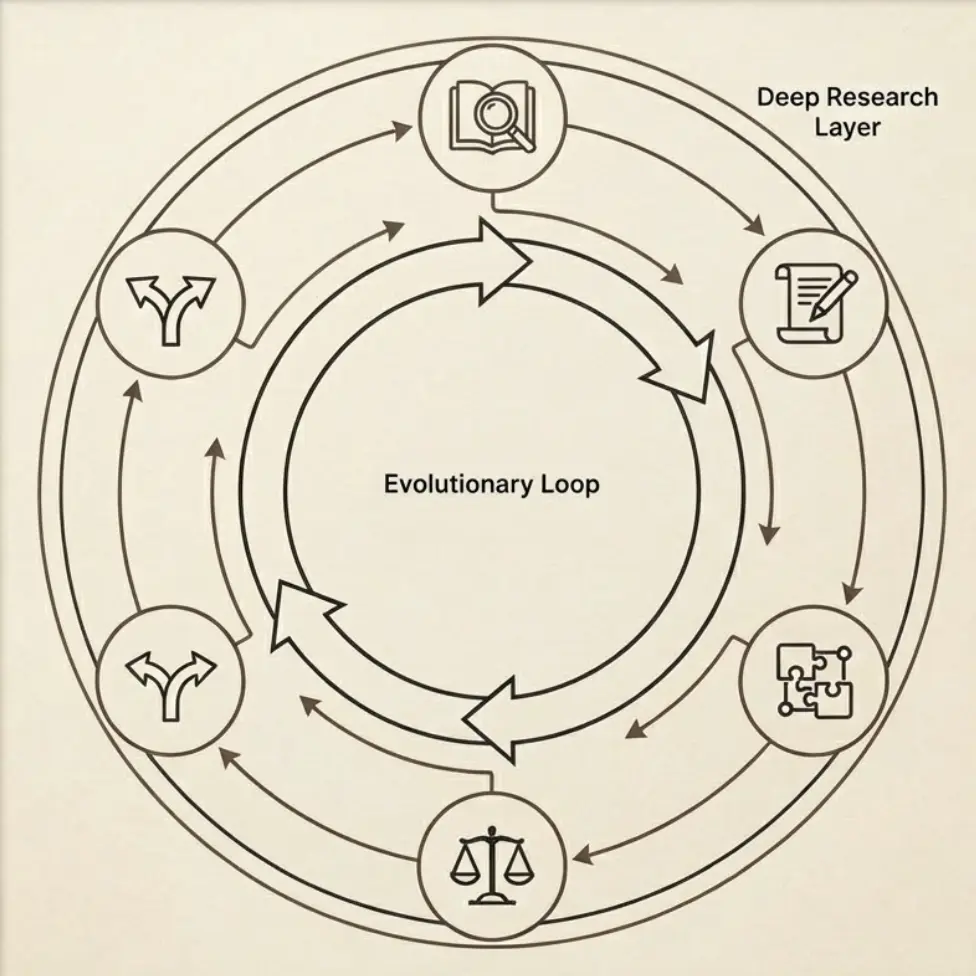

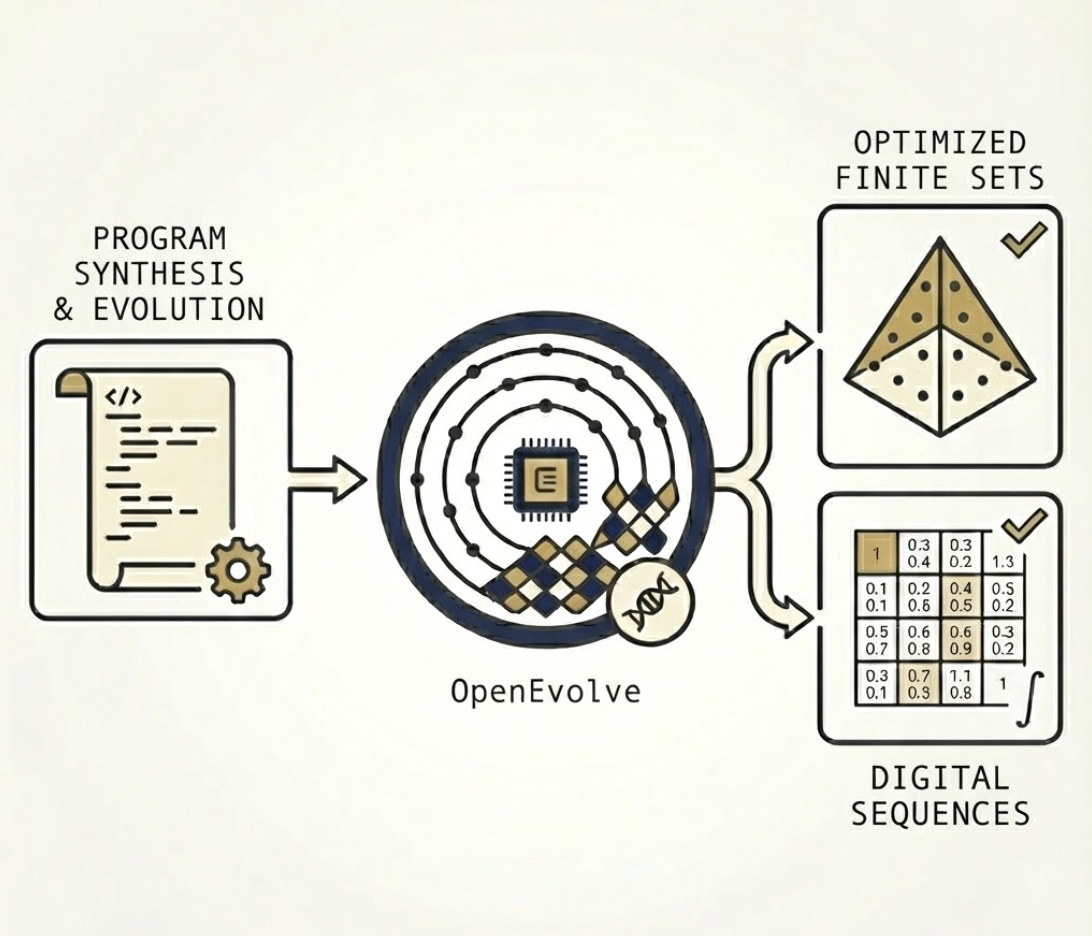

Quasi-Monte Carlo (QMC) methods generate "low-discrepancy" point sets: deterministic constructions that fill a multi-dimensional space more uniformly than the independent and identically distributed (i.i.d.) random points used in standard Monte Carlo simulation. With these structured point sets, QMC can solve complex integration problems, such as pricing financial options or simulating physical systems, with significantly lower error (or lower variance, for randomized QMC) and faster convergence. The link between uniformity and accuracy is the Koksma–Hlawka inequality. For integrands of bounded variation (in the sense of Hardy and Krause), it bounds the integration error by the integrand's variation times the point set's star discrepancy. Low-discrepancy sequences reach a star discrepancy of order (log N)^d / N for N points in d dimensions, compared with the N^(-1/2) error rate of plain Monte Carlo.

OpenEvolve helped advance this field by treating QMC design as a program synthesis problem. Instead of humans manually tweaking formulas, an LLM-driven evolutionary search writes and refines the code that generates the point sets. This approach discovered new, record-breaking 2D and 3D point sets with lower star discrepancy than previously known configurations. Star discrepancy measures uniformity as the largest gap between the fraction of points inside a box and that box's volume, taken over all boxes anchored at the origin.

OpenEvolve also optimized Sobol' direction numbers, the parameters that determine how a Sobol' sequence is generated. In 32-dimensional financial option-pricing simulations, the evolved direction numbers significantly reduced error compared with long-standing industry standards such as the Joe–Kuo direction numbers. Full details are in the paper and code.
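The sketch below makes the uniformity measure concrete. It is a brute-force evaluator for the exact star discrepancy of a small point set in [0,1)^d. It relies on the standard fact that the supremum over anchored boxes [0, y) is attained on the grid formed by the points' own coordinates together with 1. At each such corner it compares the box volume against both the closed-box and the open-box point counts. This is not OpenEvolve's evaluator. The function names `star_discrepancy` and `van_der_corput` are hypothetical. The cost grows like N^(d+1), so it only suits small N and d.

```python
from itertools import product
from math import prod
import random


def star_discrepancy(points):
    """Exact star discrepancy of points in [0,1)^d by brute force (hypothetical helper).

    Only box corners whose coordinates are point coordinates or 1 are checked,
    which is enough to find the supremum. Runtime grows like N**(d + 1).
    """
    n = len(points)
    d = len(points[0])
    axes = [sorted({p[j] for p in points} | {1.0}) for j in range(d)]
    worst = 0.0
    for y in product(*axes):
        vol = prod(y)
        # Excess: fraction of points in the closed box [0, y] minus its volume.
        closed = sum(all(p[j] <= y[j] for j in range(d)) for p in points)
        # Deficit: volume minus fraction of points in the open box [0, y).
        inside = sum(all(p[j] < y[j] for j in range(d)) for p in points)
        worst = max(worst, closed / n - vol, vol - inside / n)
    return worst


def van_der_corput(i, base=2):
    """Radical inverse of i: mirror its base-`base` digits about the radix point."""
    x, denom = 0.0, 1.0
    while i:
        i, digit = divmod(i, base)
        denom *= base
        x += digit / denom
    return x


if __name__ == "__main__":
    n = 32
    rng = random.Random(0)
    random_pts = [(rng.random(), rng.random()) for _ in range(n)]
    # 2D Hammersley set: (i/n, base-2 radical inverse of i).
    hammersley = [(i / n, van_der_corput(i)) for i in range(n)]
    print("random     D* =", round(star_discrepancy(random_pts), 4))
    print("Hammersley D* =", round(star_discrepancy(hammersley), 4))
```

The usage block compares i.i.d. random points with a classical low-discrepancy construction (the Hammersley set) at the same N. Exhaustive enumeration is only practical for tiny inputs. Searching for record-setting point sets at realistic sizes would need faster exact or approximate evaluators.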
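The role of Sobol' direction numbers can be illustrated with a short generator. In Sobol's construction, each dimension is defined by a primitive polynomial over GF(2) of degree s, whose inner coefficients are packed into an integer a, and by s odd initial values m_1..m_s. A recurrence extends these to m_k, and the k-th direction number is v_k = m_k / 2^k. Point i is then the XOR of the direction numbers selected by the binary digits of i. This is a minimal sketch, not OpenEvolve's code. The three `DIRECTION_TABLE` rows are assumed to match the first rows (dimensions 2 to 4) of the published Joe–Kuo table. The names `DIRECTION_TABLE`, `direction_numbers` and `sobol_points` are hypothetical. It also uses the direct ordering rather than the Gray-code ordering common in production generators. A table of this shape is the kind of parameter a direction-number search would modify, though how the paper encodes it is not shown here.

```python
# Bits of precision per coordinate: points lie on a grid of spacing 2**-BITS.
BITS = 32

# Hypothetical excerpt: (s, a, [m_1, ..., m_s]) for dimensions 2, 3, 4,
# assumed to match the first rows of the Joe-Kuo table. s is the degree of the
# primitive polynomial; a packs its inner coefficients a_1..a_{s-1} (a_1 = MSB).
DIRECTION_TABLE = [
    (1, 0, [1]),
    (2, 1, [1, 3]),
    (3, 1, [1, 3, 1]),
]


def direction_numbers(s, a, m_init, bits=BITS):
    """Extend m_1..m_s with Sobol's recurrence; return each v_k scaled by 2**bits."""
    m = list(m_init)
    for i in range(s, bits):  # m[i] holds m_{i+1}
        new = m[i - s] ^ (m[i - s] << s)
        for k in range(1, s):
            if (a >> (s - 1 - k)) & 1:
                new ^= m[i - k] << k
        m.append(new)
    # v_k = m_k / 2**k, stored as the integer m_k * 2**(bits - k).
    return [m[k] << (bits - 1 - k) for k in range(bits)]


def sobol_points(n, dim):
    """First n points of a dim-dimensional Sobol' sequence (direct ordering)."""
    if not 1 <= dim <= 1 + len(DIRECTION_TABLE):
        raise ValueError("dim outside the range covered by DIRECTION_TABLE")
    if not 0 <= n <= 2**BITS:
        raise ValueError("n must be between 0 and 2**BITS")
    # Dimension 1 uses m_k = 1 for every k (the base-2 van der Corput sequence).
    tables = [[1 << (BITS - 1 - k) for k in range(BITS)]]
    for s, a, m_init in DIRECTION_TABLE[: dim - 1]:
        tables.append(direction_numbers(s, a, m_init))
    points = []
    for i in range(n):
        coords = []
        for v in tables:
            x, rest, k = 0, i, 0
            # XOR in v_{k+1} for every set bit k of i.
            while rest:
                if rest & 1:
                    x ^= v[k]
                rest >>= 1
                k += 1
            coords.append(x / 2**BITS)
        points.append(tuple(coords))
    return points


if __name__ == "__main__":
    for p in sobol_points(8, 3):
        print(p)
```

In each dimension separately, the first eight points printed are a permutation of 0, 1/8, ..., 7/8. Changing the initial m values or polynomials changes how the dimensions interact. That interaction is what distinguishes good direction numbers from poor ones in high-dimensional problems.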